UW Course Calendar Scraper

I’ve had the idea of making a self-updating, navigable tree of Waterloo courses, and this scraper is the first step. (Actually, it is not the first step for me. It started with Django, which came out of my last work report’s comparison with Zen Cart. Some credit for the inspiration goes to Thomas Dimson, who made the Course Qualifier.) The goal of this step is to gather all the information that will be stored in a database. With the idea and plan in place, the coding phase begins with the item definition:

    from scrapy.item import Item, Field

    class UcalendarItem(Item):
        # One field per piece of course information to store.
        course = Field()
        name = Field()
        desc = Field()
        prereq = Field()
        offered = Field()

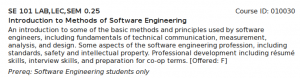

I wanted to gather the course (“SE 101”), name (“Introduction to Methods of Software Engineering”), desc (“An introduction …”), prereq (“Software Engineering students only”), and offered (“F”) each as separate fields.

In order to do that, I wrote a spider to crawl the page:

    from scrapy.spider import BaseSpider
    from scrapy.selector import HtmlXPathSelector

    from ucalendar.items import UcalendarItem

    class UcalendarSpider(BaseSpider):
        domain_name = "uwaterloo.ca"
        start_urls = [
            "http://www.ucalendar.uwaterloo.ca/0910/COURSE/course-SE.html"
        ]

        def parse(self, response):
            hxs = HtmlXPathSelector(response)
            # Each course is laid out in its own table.
            tables = hxs.select('//table[@width="80%"]')
            items = []
            for table in tables:
                item = UcalendarItem()
                item['desc'] = table.select('tr[3]/td/text()').extract()
                item['name'] = table.select('tr[2]/td/b/text()').extract()
                # Course codes look like "SE 101".
                item['course'] = table.select('tr[1]/td/b/text()').re(r'([A-Z]{2,5} \d{3})')
                # Up to three terms (F, W, S) inside "[... Offered: ...]".
                item['offered'] = table.select('tr[3]/td').re(r'.*\[.*Offered: (F|W|S)+,* *(F|W|S)*,* *(F|W|S)*\]')
                # Only course codes are captured from the prerequisite line.
                item['prereq'] = table.select('tr[5]/td/i/text()').re(r'([A-Z]{2,5} \d{3})')
                items.append(item)
            return items

    SPIDER = UcalendarSpider()

There are several things to note:

- The prereq field here cannot capture “For Software Engineering students only”. The regular expression only matches course codes.

- Offered, unlike the other fields, can contain more than one item.

- Prereq may be empty.
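To make these notes concrete, here is a small sketch that applies the same two regular expressions with Python's standard `re` module instead of Scrapy's selectors. The helper names and the sample strings are made up for illustration, and the helper drops unmatched groups, which the selector's `.re()` may not do in exactly the same way.

```python
import re

# Same patterns as in the spider, compiled with the standard library.
COURSE_CODE = re.compile(r'([A-Z]{2,5} \d{3})')
OFFERED = re.compile(r'.*\[.*Offered: (F|W|S)+,* *(F|W|S)*,* *(F|W|S)*\]')


def course_codes(text):
    """Return every course code (e.g. "SE 101") found in text."""
    return COURSE_CODE.findall(text)


def offered_terms(text):
    """Return the terms captured by the Offered pattern, skipping unmatched groups."""
    match = OFFERED.search(text)
    if match is None:
        return []
    return [term for term in match.groups() if term]


# Hypothetical inputs, not copied from the calendar page.
codes = course_codes("Prereq: SE 101, CS 137")                  # two course codes
no_codes = course_codes("Software Engineering students only")   # nothing to match
terms = offered_terms("An introduction ... [Offered: F, W]")    # more than one term
```

With these inputs, `codes` holds two entries, `no_codes` is an empty list, and `terms` holds both terms, which mirrors the three notes above.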

Finally, an item pipeline writes the spider’s results to an output file. CSV is enough here, since it can be imported into a database.

    import csv

    class CsvWriterPipeline(object):

        def __init__(self):
            # The Python 2 csv module expects the file to be opened in binary mode.
            self.csvwriter = csv.writer(open('items.csv', 'wb'))

        def process_item(self, spider, item):
            try:
                self.csvwriter.writerow([item['course'][0], item['name'][0], item['desc'][0], item['prereq'][0], item['offered'][0]])
            except IndexError:
                # prereq (or offered) was empty: join the lists instead of indexing them.
                self.csvwriter.writerow([item['course'][0], item['name'][0], item['desc'][0], ' '.join(item['prereq']), ' '.join(item['offered'])])
            return item

Two gotchas:

- Because prereq might be empty, item['prereq'][0] can raise an IndexError, so there needs to be an exception handler.

- Offered may be variable length. The list needs to be joined to output all of the terms the course is offered.
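One possible alternative, not what the pipeline above does, is to join prereq and offered on every row. An empty list then simply becomes an empty string and no exception handler is needed. The sketch below uses a plain dict in place of a Scrapy item, and `item_to_row` is a hypothetical helper name.

```python
import csv
import io


def item_to_row(item):
    """Build one CSV row, always joining the two list-valued fields."""
    return [
        item['course'][0],
        item['name'][0],
        item['desc'][0],
        ' '.join(item['prereq']),                      # '' when there are no prerequisites
        ' '.join(t for t in item['offered'] if t),     # skip empty regex groups
    ]


# A plain dict standing in for a scraped item (values are illustrative).
sample = {
    'course': ['SE 101'],
    'name': ['Intro'],
    'desc': ['An introduction'],
    'prereq': [],
    'offered': ['F', 'W'],
}

buffer = io.StringIO(newline='')
csv.writer(buffer).writerow(item_to_row(sample))
```

The trade-off is that every row goes through the same code path, so a course with both several prerequisites and several terms keeps all of them.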

This part of the project was done in 2 hours with Scrapy. The project can be found in the downloads section.
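As for getting the CSV into a database later on, a minimal sketch with the standard `sqlite3` module might look like the following. The table name, column names, and the `load_courses` helper are assumptions for illustration. The column order follows the rows written by the pipeline.

```python
import csv
import io
import sqlite3


def load_courses(csv_file, conn):
    """Insert rows of (course, name, desc, prereq, offered) into a courses table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS courses ("
        "course TEXT PRIMARY KEY, name TEXT, description TEXT, "
        "prereq TEXT, offered TEXT)"
    )
    # Ignore rows that do not have exactly five columns.
    rows = [tuple(row) for row in csv.reader(csv_file) if len(row) == 5]
    conn.executemany(
        "INSERT OR REPLACE INTO courses VALUES (?, ?, ?, ?, ?)", rows
    )
    conn.commit()
    return len(rows)


# Illustrative data standing in for items.csv, loaded into an in-memory database.
sample_csv = io.StringIO(
    "SE 101,Intro,An introduction,,F\r\n"
    "SE 102,Seminar,A seminar,SE 101,W S\r\n"
)
connection = sqlite3.connect(":memory:")
loaded = load_courses(sample_csv, connection)
```

For a real run, the `io.StringIO` object would be replaced by the opened `items.csv` file and `":memory:"` by a database path.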