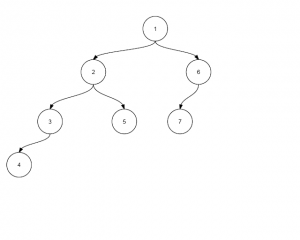

While working on the tree part of the coursetree project, I ran into the question of how to display the course dependencies. I have written a recursive function that returns a recursive list of course prerequisites:

[u'SE 112', [u'MATH 135', []]]

I wanted to turn it into a diagram of course prerequisites and decided 2 flat lists could retain the data:

- a list for the levels

- a list for connections between courses

For example,

would translate to

and

[(1,2),(2,3),(2,5),(3,4),(1,6),(6,7)]

Separating the Levels

We start off with a recursive list representing the tree structure:

[1,[2,6,[3,5,[4,[]],[]],[7,[]]]]

To tackle the problem, I first solved a similar one: flattening a list.

This can easily be done in Scheme, as syntax and type declarations do not get in the way.

(define (flatten sequence)

(cond ((null? sequence) '()) ; simplest case: ()

((list? (car sequence)) ; a list in front: ((1) ...)

(append (flatten (car sequence))

(flatten (cdr sequence))))

(else (cons (car sequence) ; an atom in front: (1 ...)

(flatten (cdr sequence))))))

> (flatten '(1 (2 3)))

(list 1 2 3)

Here’s the same code translated into Python:

car = lambda lst: lst[0]

cdr = lambda lst: lst[1:]

def flatten(seq):

if not seq:

return list()

elif isinstance(car(seq), list):

return flatten(car(seq)).extend(flatten(cdr(seq)))

else:

return [car(seq), flatten(cdr(seq))]

Unfortunately, flatten in Python produces a hypernested structure for flat lists, as (1 2 3) in Scheme is equibalent to (cons 1 (cons 2 (cons 3 empty))) or (1 . (2 . 3)).

>>> flatten([1,2,3])

[1, [2, [3, []]]]

The more serious problem is that it exposes a quirk in Python:

Yes, that means the list disappears after being extended with an empty list . . . one of those unpleasant surprises.

So let’s redefine a flat list in Python:

def flatten(tree):

result = []

for node in tree:

if isinstance(node, list):

result.extend(flatten(node))

else:

result.append(node)

return result

>>> flatten([1,2,3])

[1,2,3]

>>> flatten([1,[2,3]])

[1,2,3]

There is a similarity between this approach and the recursive one: different actions are taken for a list node and other nodes. I used a combined functional and imperative approach to solve the problem:

car = lambda lst: lst[0]

cdr = lambda lst: lst[1:]

'''

depth: returns the maximum nesting level of a list

Given:

ls, a list

Result:

an integer

'''

def depth(ls):

if not ls:

return 0

elif isinstance(car(ls),list):

return max(depth(car(ls))+1,depth(cdr(ls)))

else:

return max(1,depth(cdr(ls)))

'''

strip: returns the list elements of a list

Given:

ls, a list

Result:

ls, the modified list

'''

def strip(ls, top):

if top:

for item in top:

if item in ls:

ls.remove(item)

elif cdr(ls):

ls = car(ls) + strip(cdr(ls), top) # case like [[1], ...]

else:

ls = car(ls) # case like [[1]]

return ls

'''

level: returns the top level elements of a list

Given:

ls, a list

Result:

a new list

'''

def level(ls):

if not ls:

return []

elif not isinstance(car(ls),list):

return [car(ls)] + level(cdr(ls))

else:

return level(cdr(ls))

'''

levelize: returns a list of lists, each list is contains the items of a level

Given:

ls, a list

Result:

a new list

'''

def levelize(ls):

result = []

a = list(ls)

for i in range(2*depth(ls)):

if not i%2:

result.append(level(a))

a = strip(a, level(a))

return result

>>> levelize([1,[2,6,[3,5,[4,[]],[]],[7,[]]]])

[[1], [2, 6], [3, 5, 7], [4]]

Connecting the Nodes

We start off with a recursive list representing the tree structure, slightly different from the list for separating the levels:

Again, a mix of recursion and iteration easily solves the problem:

'''

pair: returns a list of lists, each list has an odd and even pair

Given:

ls, a list

Result:

a list

'''

def pair(ls):

result = []

while ls:

result.append(ls[0:2])

ls = ls[2:]

return result

'''

connect: returns a list of tuples, each tuple represents an edge of the graph

Given:

ls, a list

Result:

a list of tuples

'''

def connect(ls):

result = []

if cdr(ls):

if cdr(cdr(ls)):

for item in pair(ls):

result.extend(connect(item))

else:

second = car(cdr(ls))

for item in level(second):

result.append((car(ls),item))

result.extend(connect(second))

return result

>>> connect([1,[2,[3,[4],5],6,[7]]])

[(1, 2), (1, 6), (2, 3), (2, 5), (3, 4), (6, 7)]

Hopefully, you’ve had fun reading this article and meanwhile came up with a better way to represent the tree as a flat structure.