Tokenizing Prerequisites

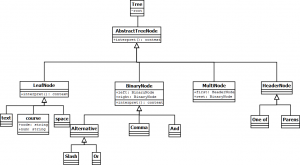

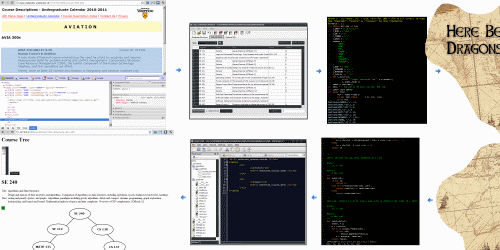

I’m taking a bite-by-bite approach to interpreting the course prerequisites that come out of the scraper, so that they can be stored in the database. The part that retrieves the data recursively is already finished. The approach I decided to take is the same as building a compiler.

I previously favored a quick approach such as the interpreter pattern.

However, a comma in a prerequisite string means different things depending on context. Compare:

(BIOL 140, BIOL 208 or 330) and (BIOL 308 or 330)

(CS 240 or SE 240), CS 246, ECE 222
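To make the ambiguity concrete, here is a small sketch of what a one-meaning-per-symbol rule would do to those two strings. `naive_translate` is a hypothetical helper made up for illustration, not part of the scraper, and it assumes every comma means "and".

```python
def naive_translate(prereq):
    """Hypothetical rule: treat every comma as 'and', whatever the context."""
    return prereq.replace(",", " and")


examples = [
    "(BIOL 140, BIOL 208 or 330) and (BIOL 308 or 330)",
    "(CS 240 or SE 240), CS 246, ECE 222",
]

for prereq in examples:
    print(naive_translate(prereq))
```

The second string comes out reading sensibly. The first only does if the comma inside the parentheses really was meant as "and"; if it was separating alternatives, the rule has silently changed the requirement.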

The interpreter pattern assumes a symbol has only one meaning. Thanks again to a course I took, I was already familiar with both the problem and the solution. Recognizing and defining the problem was the hard part. A quick overview of the steps involved from start to finish:

The first step in the compilation process is to tokenize the input. That takes care of blobs such as “Level at least 3A; Not open to General Mathematics students.” These are useless as far as prerequisites go, so they should not show up in the token stream.

Building the lexer was as simple as specifying the token list, writing regular expressions for them, and setting ignored characters.

    import ply.lex as lex

    # Token names the lexer can produce.
    tokens = (
        'DEPT',
        'NUM',
        'OR',
        'COMMA',
        'SEMI',
        'AND',
        'LPARENS',
        'RPARENS',
    )

    # One regular expression per token.
    t_DEPT = r'[A-Z]{2,5}'
    t_NUM = r'\d{3}'
    t_OR = r'or'
    t_COMMA = r','
    t_SEMI = r';'
    t_AND = r'and'
    t_LPARENS = r'\('
    t_RPARENS = r'\)'

    # Characters to skip between tokens.
    t_ignore = ' \t'

    def t_error(t):
        # Report the unrecognized character and move past it.
        print("Illegal character '%s'" % t.value[0])
        t.lexer.skip(1)

    lexer = lex.lex()

    data = ' (CS 240 or SE 240), CS 246, ECE 222'
    lexer.input(data)

    while True:
        tok = lexer.token()
        if not tok:
            break  # No more input
        print(tok)

Running it gives the output:

    LexToken(LPARENS,'(',1,1)
    LexToken(DEPT,'CS',1,2)
    LexToken(NUM,'240',1,5)
    LexToken(OR,'or',1,9)
    LexToken(DEPT,'SE',1,12)
    LexToken(NUM,'240',1,15)
    LexToken(RPARENS,')',1,18)
    LexToken(COMMA,',',1,19)
    LexToken(DEPT,'CS',1,21)
    LexToken(NUM,'246',1,24)
    LexToken(COMMA,',',1,27)
    LexToken(DEPT,'ECE',1,29)
    LexToken(NUM,'222',1,33)
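For anyone who wants to poke at the token rules without installing PLY, roughly the same thing can be sketched with the standard `re` module. This is a stand-in, not the lexer used in this post: `TOKEN_SPEC` and `tokenize` are names made up for the sketch, alternatives are tried in list order rather than PLY's own ordering, and unrecognized characters are dropped silently instead of being reported.

```python
import re

# Same patterns as the token rules above, plus two helper entries:
# SKIP for ignored whitespace and ERROR for anything unrecognized.
TOKEN_SPEC = [
    ("DEPT", r"[A-Z]{2,5}"),
    ("NUM", r"\d{3}"),
    ("OR", r"or"),
    ("AND", r"and"),
    ("COMMA", r","),
    ("SEMI", r";"),
    ("LPARENS", r"\("),
    ("RPARENS", r"\)"),
    ("SKIP", r"[ \t]+"),
    ("ERROR", r"."),
]

# One combined regex with a named group per token type.
TOKEN_RE = re.compile("|".join("(?P<%s>%s)" % pair for pair in TOKEN_SPEC))


def tokenize(text):
    """Return a list of (type, value, position) tuples for text."""
    tokens = []
    for match in TOKEN_RE.finditer(text):
        kind = match.lastgroup  # name of the group that matched
        if kind in ("SKIP", "ERROR"):
            continue  # whitespace and unknown characters produce no token
        tokens.append((kind, match.group(), match.start()))
    return tokens


for token in tokenize(" (CS 240 or SE 240), CS 246, ECE 222"):
    print(token)
```

Note that the comma comes out as a plain `COMMA` token in every context. The tokenizer makes no attempt to decide whether it means "and" or "or"; that decision is left to a later step that can see the surrounding tokens.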