Benchmarking Browsers by Page Load Times

Recent browser comparisons focus on JavaScript speed, but there are many other ways to measure browser performance, including image load time, reloading from cache, start-up time, and CSS rendering speed. Opera was in the lead a few years ago, and it is now being branded as the fastest browser again.

You may have noticed the Chrome ad on the left. So which browser is actually the fastest? I ran the tests on Linux and Windows.

Benchmark Method

The benchmark uses JavaScript to detect when the browser fires onload. There is nothing special about that, as long as the browser follows the convention that onload fires after the page has loaded. Browsers that broke this convention used to be enough of a problem that I only tested Firefox and ignored the rest. According to this article, it was fixed two years ago, so I took the benchmark from the site and ran it. Not surprisingly, Firefox got the highest score. That is a bad result here: the score is an average load time, so lower is better, and ideally a page takes no time to load.
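As a rough sketch of how the numbers are aggregated: the "Site Average" rows in the result tables are consistent with a plain arithmetic mean of the ten recorded loads of a page (one first load plus nine reloads). The helper name below is my own and is not taken from the benchmark's code. The timings are the Firefox 3.6.3 values for baidu.com.htm from the Linux table.

```python
from statistics import mean


def site_average(load_times):
    """Mean of all recorded loads for one page.

    Hypothetical helper for illustration, not the benchmark's own code.
    """
    return mean(load_times)


# Firefox 3.6.3 on Linux, baidu.com.htm: first load followed by nine reloads.
firefox_baidu = [692, 356, 348, 338, 352, 345, 1046, 356, 344, 356]

print(round(site_average(firefox_baidu), 1))  # matches the table's 453.3
```

Because this is a mean, a single slow load (the 1046 above) pulls the site average up noticeably.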

Linux

Google Chrome for Linux behaves differently from the Windows version: the benchmark loaded the first site once, but could neither time it nor reload it. Opera got stuck on MySpace once and kept adding more elements to the page, possibly due to an ad that is not usually loaded locally. I ran these tests on Sabayon Linux with kernel 2.6.30 (I expect 2.6.33 to be faster, since it has been patched with Con Kolivas’s kernel enhancements). An interesting note here, not seen in other parts of the result set, is that Arora took longer on the first load of a page, but was faster on all subsequent reloads. The total score for Arora comes second to Opera. On another note, Firefox with the same extensions had faster first page loads on Linux than on Windows (931 versus about 1007), although its overall score was slightly worse on Linux (about 705 versus 659).

| | Firefox 3.6.3 | Arora 0.10.2 |
| --- | --- | --- |
| Beginning Benchmark | | |
| baidu.com.htm | 692 | 1461 |
| | 356 | 47 |
| | 348 | 37 |
| | 338 | 49 |
| | 352 | 25 |
| | 345 | 26 |
| | 1046 | 26 |
| | 356 | 27 |
| | 344 | 28 |
| | 356 | 27 |
| Site Average | 453.3 | 175.3 |
| blogger.com.htm | 445 | 2408 |
| | 285 | 202 |
| | 283 | 205 |
| | 269 | 206 |
| | 272 | 197 |
| | 283 | 197 |
| | 262 | 200 |
| | 271 | 190 |
| | 262 | 194 |
| | 259 | 193 |
| Site Average | 289.1 | 419.2 |
| facebook.com.htm | 472 | 515 |
| | 450 | 315 |
| | 447 | 305 |
| | 628 | 304 |
| | 463 | 309 |
| | 476 | 314 |
| | 469 | 319 |
| | 579 | 316 |
| | 459 | 318 |
| | 453 | 319 |
| Site Average | 489.6 | 333.4 |
| google.com.htm | 123 | 84 |
| | 109 | 21 |
| | 96 | 20 |
| | 108 | 21 |
| | 102 | 21 |
| | 106 | 21 |
| | 94 | 22 |
| | 91 | 21 |
| | 94 | 22 |
| | 94 | 21 |
| Site Average | 101.7 | 27.4 |
| havenworks.com.htm | 3639 | 4103 |
| | 2587 | 217 |
| | 2793 | 202 |
| | 2598 | 202 |
| | 2635 | 203 |
| | 2614 | 203 |
| | 2612 | 203 |
| | 2594 | 204 |
| | 2585 | 207 |
| | 2572 | 216 |
| Site Average | 2722.9 | 596 |
| live.com.htm | 305 | 413 |
| | 153 | 101 |
| | 154 | 79 |
| | 157 | 82 |
| | 152 | 74 |
| | 159 | 84 |
| | 160 | 74 |
| | 166 | 79 |
| | 143 | 76 |
| | 149 | 89 |
| Site Average | 169.8 | 115.1 |
| myspace.com.tom.htm | 1429 | 1965 |
| | 1230 | 759 |
| | 1233 | 778 |
| | 1235 | 764 |
| | 1243 | 818 |
| | 1229 | 753 |
| | 1227 | 766 |
| | 1275 | 775 |
| | 1269 | 774 |
| | 1224 | 769 |
| Site Average | 1259.4 | 892.1 |
| reddit.com.htm | 604 | 586 |
| | 557 | 399 |
| | 542 | 397 |
| | 541 | 392 |
| | 523 | 404 |
| | 513 | 393 |
| | 766 | 400 |
| | 521 | 385 |
| | 533 | 386 |
| | 525 | 382 |
| Site Average | 562.5 | 412.4 |
| wikipedia.org.htm | 670 | 4110 |
| | 242 | 36 |
| | 232 | 49 |
| | 231 | 34 |
| | 470 | 34 |
| | 232 | 31 |
| | 236 | 30 |
| | 229 | 32 |
| | 227 | 31 |
| | 239 | 49 |
| Site Average | 300.8 | 443.6 |
| Benchmark Complete | | |
| Score | 705.455555555556 | 379.388888888889 |
| First Page Load Average | 931 | 1738.33333333333 |
| Website | http://gentoo-portage.com/www-client/mozilla-firefox | http://gentoo-portage.com/www-client/arora |

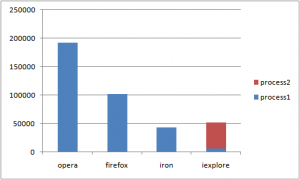
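The two summary rows can be reproduced from the Linux table itself. As far as I can tell from the numbers, "Score" is the mean of the nine site averages and "First Page Load Average" is the mean of the first load of each page. The sketch below only checks that arithmetic. The variable names are mine, and the values are copied from the table in page order.

```python
from statistics import mean

# Site averages copied from the Linux table, in page order.
site_averages = {
    "Firefox 3.6.3": [453.3, 289.1, 489.6, 101.7, 2722.9, 169.8, 1259.4, 562.5, 300.8],
    "Arora 0.10.2": [175.3, 419.2, 333.4, 27.4, 596, 115.1, 892.1, 412.4, 443.6],
}

# First recorded load of each page, same page order.
first_loads = {
    "Firefox 3.6.3": [692, 445, 472, 123, 3639, 305, 1429, 604, 670],
    "Arora 0.10.2": [1461, 2408, 515, 84, 4103, 413, 1965, 586, 4110],
}

for browser in site_averages:
    score = mean(site_averages[browser])  # lower is better
    first = mean(first_loads[browser])
    print(f"{browser}: score {score:.2f}, first page load average {first:.2f}")
```

This shows why Arora's score is better even though its first loads are slower: nine of every ten timings are reloads, so reload speed dominates the score.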

Windows

Not surprisingly, the winners on Windows were 32-bit browsers. Aside from the small speed increase due to smaller pointer sizes in 32-bit applications, I think Opera and Chrome are faster browsers, as they advertise. A surprising result is that 64-bit IE ran faster than 64-bit Firefox. I noticed that a while ago, but decided to stick with Firefox because it has add-ons. Iron is a stripped-down version of Chrome built from the Chromium source code. It should be slightly faster, with the slimmer binary and no personal tracking features. I ran this on Windows 7 Pro 64-bit Version 6.1 (Build 7600).

| | Firefox 3.6.3 | Opera 10.52 | Iron 4.0.280 | Internet Explorer |
| --- | --- | --- | --- | --- |
| Beginning Benchmark | | | | |
| baidu.com.htm | 863 | 720 | 783 | 835 |
| | 409 | 12 | 9 | 42 |
| | 402 | 12 | 7 | 44 |
| | 407 | 11 | 8 | 44 |
| | 385 | 12 | 9 | 38 |
| | 391 | 11 | 8 | 42 |
| | 407 | 12 | 9 | 38 |
| | 403 | 11 | 8 | 41 |
| | 401 | 12 | 9 | 36 |
| | 405 | 11 | 11 | 42 |
| Site Average | 447.3 | 82.4 | 86.1 | 120.2 |
| blogger.com.htm | 398 | 220 | 333 | 220 |
| | 143 | 72 | 38 | 90 |
| | 140 | 69 | 38 | 85 |
| | 138 | 73 | 38 | 85 |
| | 140 | 70 | 41 | 86 |
| | 140 | 72 | 37 | 75 |
| | 150 | 70 | 42 | 76 |
| | 140 | 72 | 36 | 72 |
| | 140 | 70 | 41 | 73 |
| | 138 | 72 | 37 | 85 |
| Site Average | 166.7 | 86 | 68.1 | 94.7 |
| facebook.com.htm | 476 | 267 | 273 | 356 |
| | 397 | 289 | 128 | 275 |
| | 368 | 224 | 133 | 282 |
| | 489 | 222 | 129 | 283 |
| | 359 | 223 | 127 | 280 |
| | 356 | 222 | 127 | 287 |
| | 353 | 223 | 125 | 280 |
| | 453 | 232 | 126 | 281 |
| | 363 | 227 | 125 | 288 |
| | 352 | 222 | 128 | 277 |
| Site Average | 396.6 | 235.1 | 142.1 | 288.9 |
| google.com.htm | 135 | 40 | 21 | 70 |
| | 70 | 13 | 11 | 52 |
| | 70 | 14 | 11 | 37 |
| | 70 | 13 | 10 | 46 |
| | 70 | 14 | 10 | 37 |
| | 70 | 14 | 11 | 48 |
| | 70 | 14 | 11 | 43 |
| | 70 | 13 | 11 | 34 |
| | 70 | 14 | 11 | 36 |
| | 70 | 14 | 11 | 50 |
| Site Average | 76.5 | 16.3 | 11.8 | 45.3 |
| havenworks.com.htm | 4024 | 867 | 736 | 2325 |
| | 2863 | 655 | 279 | 2232 |
| | 2873 | 659 | 278 | 2231 |
| | 2866 | 665 | 280 | 2259 |
| | 2852 | 700 | 282 | 2291 |
| | 2855 | 663 | 285 | 2480 |
| | 2880 | 650 | 276 | 2297 |
| | 2930 | 668 | 280 | 2251 |
| | 2866 | 666 | 277 | 2225 |
| | 2860 | 654 | 279 | 2233 |
| Site Average | 2986.9 | 684.7 | 325.2 | 2282.4 |
| live.com.htm | 254 | 280 | 235 | 125 |
| | 95 | 75 | 38 | 132 |
| | 95 | 78 | 42 | 131 |
| | 107 | 75 | 38 | 125 |
| | 98 | 77 | 39 | 137 |
| | 96 | 77 | 40 | 125 |
| | 96 | 74 | 40 | 104 |
| | 96 | 74 | 40 | 99 |
| | 96 | 74 | 39 | 115 |
| | 95 | 76 | 38 | 103 |
| Site Average | 112.8 | 96 | 58.9 | 119.6 |
| myspace.com.tom.htm | 1253 | 1489 | 1573 | 2032 |
| | 927 | 1377 | 3451 | 1287 |
| | 928 | 1541 | 1131 | 4756 |
| | 1535 | 3978 | 1902 | 1749 |
| | 958 | 1111 | 1710 | 1436 |
| | 941 | 1080 | 1622 | 1152 |
| | 928 | 1103 | 4948 | 1918 |
| | 924 | 1081 | 2927 | 1222 |
| | 952 | 1388 | 1713 | 1230 |
| | 930 | 1049 | 3505 | 1260 |
| Site Average | 1027.6 | 1519.7 | 2448.2 | 1804.2 |
| reddit.com.htm | 552 | 246 | 259 | 541 |
| | 425 | 166 | 167 | 463 |
| | 424 | 163 | 165 | 483 |
| | 418 | 164 | 167 | 478 |
| | 608 | 164 | 166 | 478 |
| | 429 | 165 | 166 | 480 |
| | 424 | 165 | 166 | 485 |
| | 422 | 165 | 166 | 481 |
| | 432 | 164 | 167 | 495 |
| | 425 | 164 | 167 | 483 |
| Site Average | 455.9 | 172.6 | 175.6 | 486.7 |
| wikipedia.org.htm | 1105 | 726 | 955 | 967 |
| | 166 | 78 | 34 | 260 |
| | 164 | 66 | 33 | 255 |
| | 163 | 66 | 34 | 262 |
| | 163 | 66 | 32 | 253 |
| | 165 | 67 | 32 | 260 |
| | 163 | 71 | 35 | 258 |
| | 164 | 72 | 36 | 256 |
| | 162 | 71 | 33 | 267 |
| | 163 | 71 | 34 | 256 |
| Site Average | 257.8 | 135.4 | 125.8 | 329.4 |
| Benchmark Complete | | | | |
| Score | 658.677777777778 | 336.466666666667 | 382.422222222222 | 619.044444444444 |
| First Page Load Average | 1006.66666666667 | 539.444444444445 | 574.222222222222 | 830.111111111111 |
| Website | www.mozilla-x86-64.com/ | http://www.opera.com/ | http://www.srware.net/en/software_srware_iron.php | |
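To separate first loads from reloads, the reload-only average can be backed out of the two summary rows. This is a sketch of my own and not part of the benchmark. It assumes every page was loaded ten times (one first load plus nine reloads), as the tables show, so that Score is the mean over all ten loads per page. The figures are copied from the Windows table.

```python
# (Score, First Page Load Average) copied from the Windows summary rows.
summary = {
    "Firefox 3.6.3": (658.677777777778, 1006.66666666667),
    "Opera 10.52": (336.466666666667, 539.444444444445),
    "Iron 4.0.280": (382.422222222222, 574.222222222222),
    "Internet Explorer": (619.044444444444, 830.111111111111),
}

LOADS_PER_PAGE = 10  # assumption: one first load plus nine reloads per page


def reload_average(score, first_load_average, loads=LOADS_PER_PAGE):
    """Mean reload time implied by the overall score and the first-load mean."""
    return (loads * score - first_load_average) / (loads - 1)


# Rank browsers by score; lower is better.
ranked = sorted(summary, key=lambda name: summary[name][0])

for name in ranked:
    score, first = summary[name]
    print(f"{name}: score {score:.1f}, reloads only {reload_average(score, first):.1f}")
```

The ordering by score is Opera, Iron, Internet Explorer, Firefox, and it stays the same when only reloads are counted.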

Conclusion

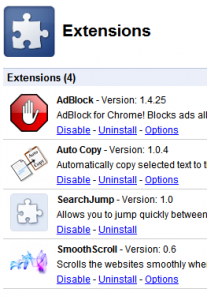

Opera beat all other browsers with just its default settings. A little more tuning of redraw rate and memory use could improve the score. I expect results in everyday browsing to deviate from these: Chrome and Firefox have DNS prefetching, and Firefox and Opera have pipelining. To improve DNS lookup speed in Opera, you can set your system to use OpenDNS to resolve domain names.

After the Benchmark (you should decide which browser to use)

I measured the memory use!

It looks like Chrome and IE were designed for really cheap laptops. (They can’t run on old computers with Windows 2000.)